Discover the Cutting Edge with the Graphics and Vision Group!

Join the GV team at Stack B from 2 pm to 5 pm for a behind-the-scenes lab tour and explore their groundbreaking work in visual computing! The Graphics & Vision Group at Trinity College Dublin specializes in computer graphics, virtual human animation, augmented and virtual reality, and computer vision. Their research drives innovative solutions with diverse, real-world applications, from real-time rendering to complex simulations.

Graphics and Vision, School of Computer Science and Statistics

Take a lab tour between 2 pm and 5 pm and see the technology in action. Experience virtual human animation, augmented and virtual reality, and real-time rendering firsthand, and learn how the projects listed here push the boundaries of computer graphics and vision and shape the future of immersive technology.

Virtual Gallery Assistants: Exploring Embodied Conversational AI in Art Spaces

Rose Connolly will guide visitors through an interactive demo in which virtual gallery assistants powered by Inworld AI enhance their visit with expert insights into art, history, and culture. These lifelike conversational agents offer real-time guidance, answering questions and providing in-depth information on artworks, artists, and techniques. Through a fully immersive virtual reality experience, you’ll see how these AI-driven assistants transform digital art spaces, making the experience educational and engaging.

Capturing 360° Room Acoustics and Music Performances for Immersive Virtual Reality Experiences

Join Mauricio Flores Vargas as he demonstrates how sound and space merge in virtual reality. In this "Multimodal Perception of Acoustic Virtual Environments" demo, higher-order Ambisonics microphones will capture 360° sound. Visitors can explore virtual environments where their voices dynamically interact with the space's acoustics. The demo is part of our ongoing research into the critical role of audio in immersive performance and storytelling, and because it responds to your own voice, it is best experienced in person.

Dog Code: Human to Animal Motion in VR

Alberto Jovane will demonstrate how human movements can be translated into realistic animal motions in virtual reality (VR). Most VR systems that let people embody animals don’t feel very realistic. To fix this, we created a new method that translates human movements into natural animal motions, like those of a quadruped (four-legged creature). Instead of directly converting human motion, we use an intermediate step to make the process smoother and more accurate. This leads to lifelike animal movements that match the user's actions, creating a more immersive experience in virtual reality.

Animation from Video: Reconstructing Human Motion in Virtual Spaces

Nivesh Gadipudi will demonstrate how skeletal animation can be extracted from short video clips to create animated SMPL (Skinned Multi-Person Linear) models, offering a glimpse into reconstructing human motion from video data. Nivesh and Colm O'Fearghail will also discuss the group's Volumetric Video (VV) capture space and explain the methods used to capture and reconstruct human motion. Together, the demo and discussion connect the technical and creative sides of the project and offer insights into the future of animated VR avatars and immersive experiences.

Co-living with Embodied AI: Integrating Virtual Humans into Real-World Environments

Join Yuan He in an exciting exploration of how virtual humans and real people can co-exist. In this interactive demonstration, you’ll experience how advanced AI, powered by large language models, allows natural conversations and social interactions between humans and virtual beings. Unlike traditional AI, these virtual humans have lifelike visuals and can move dynamically, interacting with people and the physical world in a mixed-reality setting. You’ll also see how embodied AI can function as interactive characters in gaming, navigating real-world environments and enhancing the immersive experience. Your participation will help reveal the growing role of AI in our daily lives.

Volumetric Avatars in VR: Bridging Reality and Virtuality

Experience cutting-edge volumetric technology with a Gaussian Splat avatar of a real person, viewable on Meta Quest. The immersive demo lets audiences stand side by side with the person's avatar in the same room. This juxtaposition serves as a springboard to explore our work in volumetric reconstruction and its broader implications for interactive storytelling. In the demo, Yinghan Xu will showcase Xuyu Li’s reconstructed virtual environment featuring the avatar. The setup highlights novel reconstructions of both humans and environments, and invites discussion of how virtual spaces and their inhabitants can be brought to life.

Exploring Volumetric Spaces in VR with Gaussian Splat Environments

Xuyu Li has developed a demo showcasing Gaussian Splat environments on Meta Quest, using Scaniverse, a commercially available tool for 3D captures. This demo provides an exciting opportunity to explore how everyday spaces, such as those in Stack B, can be captured and transformed into immersive VR experiences. Come to the “real” Stack B to discuss Xuyu’s research on the intersections between 3D technology and innovations in immersive virtual environments.

Exploring Real-Time Virtual Production and In-Camera VFX

Join Stefano Gatto for a demonstration of the latest advancements in production technology. He will focus on how LED displays are changing filmmaking by enabling real-time in-camera visual effects (ICVFX), and explore how these technologies blend live action with virtual environments to create immersive cinematic experiences. This demo offers a behind-the-scenes look at the future of filmmaking, where digital and physical worlds meet in real time.

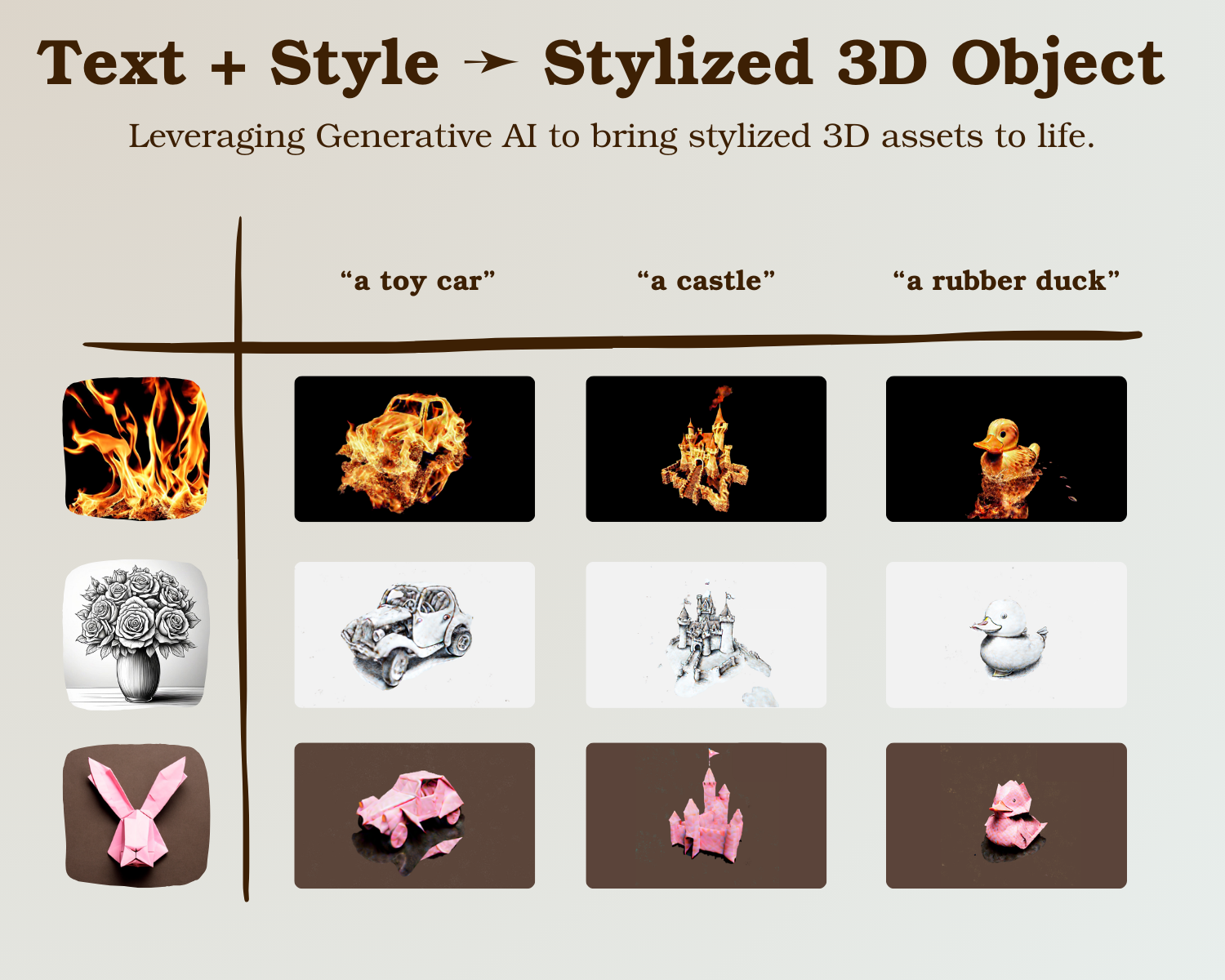

Dream-in-style: Leveraging Generative AI to Bring Stylized 3D Assets to Life

Hubert Kompanowski will introduce you to an innovative method for generating 3D objects in unique styles using generative AI. This demo reveals how simple text prompts and style references can be transformed into stunning 3D models. Unlike traditional methods, Hubert’s approach combines text and image inputs to produce visually appealing designs in various styles, showcasing the exciting potential of AI-driven creativity in 3D asset creation.